Learning to run before we walk: can virtual reality help recover stereo vision?

Our ability to perceive the marvels of the three-dimensional world surrounding us would not be possible without a phenomenon known as stereopsis. Because our eyes sit at slightly different positions, each eye receives slightly different visual information. This difference is known as binocular disparity. Stereopsis is the term for our ability to combine the information coming in from both eyes, allowing us to perceive a more accurate representation of the world. It is an integral part of human vision, and we rely on it for precise depth estimation, visuomotor control and some crucial everyday tasks. However, according to recent studies, over 5% of the population are stereo deficient, with numbers rising to over 34% in the elderly 1. Although stereo-deficient individuals can use many monocular cues, such as perspective or occlusion, to judge the depth of their surroundings, the ability to use binocular disparity is what enables us to execute complex motor tasks and judge fine differences in depth. Stereo deficiency can thus lead to many difficulties, for instance in playing sports, in safety amongst the elderly, and in career limitations for drivers, pilots, surgeons, and many others besides.
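To give a feel for the size of binocular disparity, here is a minimal Python sketch of the underlying geometry. It is an illustration only, not a method taken from any of the studies cited here: the interpupillary distance of 63 mm is an assumed typical adult value, the function names are hypothetical, and both points are assumed to lie straight ahead of the viewer.

```python
import math

# Assumed typical adult interpupillary distance in metres (illustrative value only).
ASSUMED_IPD_M = 0.063


def vergence_angle_deg(distance_m: float, ipd_m: float = ASSUMED_IPD_M) -> float:
    """Angle between the two eyes' lines of sight when both fixate a point straight ahead."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    return math.degrees(2.0 * math.atan(ipd_m / (2.0 * distance_m)))


def relative_disparity_deg(near_m: float, far_m: float, ipd_m: float = ASSUMED_IPD_M) -> float:
    """Difference in vergence angle between a nearer and a farther point."""
    return vergence_angle_deg(near_m, ipd_m) - vergence_angle_deg(far_m, ipd_m)


if __name__ == "__main__":
    # The same 10 cm depth step, first within arm's reach and then across a room.
    for near, far in [(0.5, 0.6), (5.0, 5.1)]:
        disparity = relative_disparity_deg(near, far)
        print(f"{near} m vs {far} m: {disparity:.3f} degrees")
```

Under these assumptions, the same 10 cm step in depth produces a far larger disparity at arm's length than across a room, which is one way to see why disparity matters most for fine, close-range motor tasks.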

It has long been believed that stereo vision forms early in life, during a critical period in the development of the visual system, ranging anywhere from a few months old to 3–5 years of age. Unless changes are made during this critical period, it is generally believed that stereo vision cannot be recovered. Nevertheless, there have been reports of stereo vision recovery in adults from as early as the 19th century, when ophthalmologist Louis Émile Javal treated his sister’s strabismus (a condition where the eyes are turned in different directions) using a stereoscope 2. A more recent and well-documented account of stereo vision recovery was provided in 2006 by Oliver Sacks 3. He described the case of Susan Barry, known as ‘Stereo Sue’, who persistently used focus exercises over time to improve her depth perception. Sacks’s article challenged the conservative view of our ability to recover aspects of our visual system later in life. Even more interestingly, Bruce Bridgeman 4, a psychology professor from the University of California Santa Cruz, regained his stereo vision without any prior visual training, while watching the 3D film Hugo in the cinema with polarizing 3D glasses.

These accounts of adult stereo vision recovery have sparked more systematic work in the field of vision science and orthoptics. Most researchers so far have focused on vision recovery techniques which aim to improve overall retinal function. One example is patching one eye for a short period of time, so that individuals rely solely on monocular cues. Additionally, researchers from the University of California 5 have introduced a number of perceptual learning techniques for stereo vision recovery. Perceptual learning refers to a procedure where stereo-deficient individuals repeat the same task over many trials, just as Stereo Sue did. This type of learning has also been observed to occur in natural settings. In 2015, researchers Adrien Chopin, Dennis Levi, and Daphné Bavelier observed that dressmakers exhibited better stereoscopic vision than non-dressmakers, concluding that this was most likely attributable to a dressmaker’s continuous exposure to perceptual learning within a natural setting 6.

However, stereo deficiency, unlike other deficiencies of our visual system, is rooted in the brain rather than the retina. Thus, some doubt has been cast on the techniques currently employed in recovery therapy. The majority of techniques focus on very simple tasks, often with no resemblance to real-life scenarios, leading many to question whether stereo vision recovery is a result of the therapy itself or merely coincidental.

Recent cutting-edge developments in Virtual Reality (VR) have now provided researchers with a new tool for stereo vision recovery work. Although the concept of ‘virtual reality’ has been around since the early 1980s, logistical limitations initially prevented it from really taking off. Since the early 2010s, however, virtual reality headsets, or Head Mounted Displays (HMDs), such as the Oculus Rift 7 or HTC Vive 8, have become both smaller and cheaper, with many now available for purchase and use in everyday gaming. This surge in VR use has spread into other areas, such as training, education, medicine and psychology. Moreover, the capability and affordability of modern VR make it an attractive means for vision research and stereo vision recovery studies.

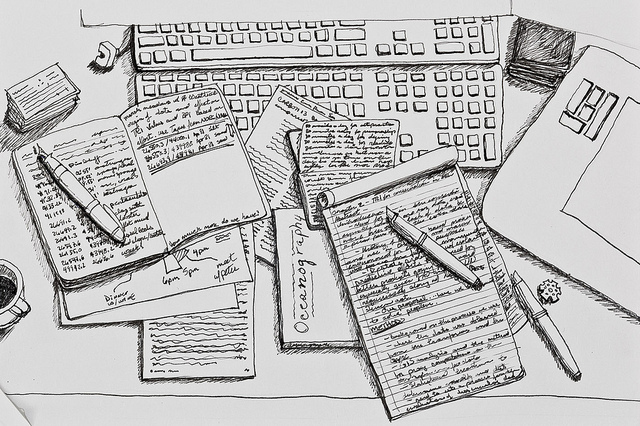

One of the most recent studies on stereo vision recovery using virtual reality comes from a team of researchers from the University of California 9. The experimental set-up is shown in Figure 1. Participants were seated in front of a display screen with shutter glasses, through which they were then presented with ‘Virtual Bugs’. Whenever these bugs were visible in three dimensions, participants were asked to use the cylinder provided to squash them. Both monocular and binocular cues were used in the experiment, and the slant of the robot hand was adjusted as part of the experimental procedure. By the end of the experiment, six out of 11 participants showed significant improvement in stereo vision, indicating the potential of the technique. However, it must be noted that the experimental set-up, although described as a virtual environment, does not fully match the real-life quality supplied by conventional VR. This is because the experiment relies on haptic feedback from the slant and the cylinder, rather than on VR tools such as HMDs or controllers.

These fine differences between the experiment and what we would typically associate with ‘virtual reality’ raise some questions. Are we really using immersive VR in these experiments, or is it just another stereo display with additional haptic feedback? There are many similarities between Head Mounted Displays (HMDs) and the shutter glasses used in this experiment; however, HMDs come with the additional benefit of providing a more immersive, depth-rich environment. This is something worth exploring in recovery studies.

Benjamin T. Backus at Vivid Vision 10 offers a solution for this with the Vivid Vision System, a VR system used in visual rehabilitation in over 108 clinics worldwide. This system incorporates HMDs such as the Oculus Rift and the HTC Vive, operated by hand-held controllers. Alternatively, a more stationary version of the VR system is also available, which uses a mobile phone with a viewer such as Google Cardboard. Due to the equipment’s affordability, the latter provides an even more feasible solution, with the added advantage of being suitable for home use. The team have developed a series of six games which aim to help participants maintain motor fusion, encourage integration of multiple cues, emphasise disparity for visuomotor tasks, train the peripheral visual field, practise acuity, and maintain control of vergence eye posture. As yet, there are no published results from these studies; however, their work holds promise for a more detailed insight into how VR can aid recovery from stereo deficiency and other visual deficiencies.

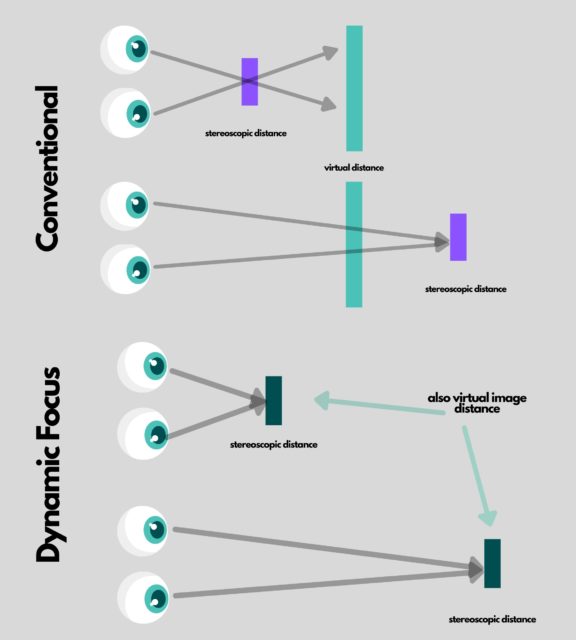

Virtual reality is a promising tool in psychology research, although, as with many things, it does not come without its problems. Many participants report experiencing side effects, such as motion sickness, or cybersickness. Furthermore, there have also been accounts of eye strain, dissociation between reality and the virtual world, and confusion. In traditional HMDs, a virtual image is focused at a fixed distance away from the eyes, but the depth of the objects in the scene varies. This results in constantly changing binocular disparity information, forming the basis of accommodation and vergence conflict issues in HMDs, a problem which is more pronounced than in other, more common 3D domains, such as 3D TVs or cinema viewing. Several solutions have been proposed to resolve this, for instance multiple focus, depth blending and retinal tracking. Possibly the most promising of these, however, is the adaptive focus technique proposed in 2017 by a team of researchers from Stanford University and Dartmouth College 11 (Figure 2). Their work describes two prototype HMDs, which adapt to the user via computational optics. Focus-tunable lenses, mechanically actuated displays, and mobile gaze tracking can all be tailored to the user, reducing refractive errors and providing focus cues by updating the system based on where the user is looking. Thus, the future is bright, promising technology better equipped for studies on recovery from visual system deficiencies.
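The accommodation and vergence conflict described above can be put into rough numbers. The short Python sketch below is an illustration under stated assumptions, not a model of any particular headset: the fixed focus distance of 2 m is a hypothetical value, and the function names are invented for this example. It expresses both the headset's fixed focus distance and the simulated distance of a virtual object in dioptres (1 divided by the distance in metres) and reports the gap between them.

```python
# Hypothetical fixed focus distance of a headset's optics, in metres (assumption).
ASSUMED_FOCUS_DISTANCE_M = 2.0


def dioptres(distance_m: float) -> float:
    """Convert a viewing distance in metres to optical power in dioptres."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    return 1.0 / distance_m


def focus_conflict_dioptres(
    object_distance_m: float,
    focus_distance_m: float = ASSUMED_FOCUS_DISTANCE_M,
) -> float:
    """Gap between where the eyes converge (the virtual object) and where they must focus (the fixed image)."""
    return abs(dioptres(object_distance_m) - dioptres(focus_distance_m))


if __name__ == "__main__":
    # Virtual objects at several simulated distances, all shown on the same fixed-focus display.
    for distance in (0.5, 1.0, 2.0, 10.0):
        gap = focus_conflict_dioptres(distance)
        print(f"object at {distance} m: conflict of {gap:.2f} dioptres")
```

With these assumed numbers the gap vanishes only when the virtual object sits at the headset's focus distance, and it grows quickly as objects are brought close to the viewer. This is the mismatch that adaptive focus displays aim to reduce.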

Despite such promise, we still cannot ignore the many problems of VR. How much confidence can we place in studies claiming that stereo vision has been recovered using these imperfect tools? Here, we are faced with the issue of how we truly define virtual reality. While the ‘Virtual Bugs’ experiment may describe a controlled approach with promising results, can we really label this as a completely immersive VR experience? Although Vivid Vision proposes a more immersive approach, the results of these tests are still pending, meaning we are still unable to determine how effective true VR is for the recovery of stereo vision.

Before VR entered the picture, stereo vision recovery in adults had been debated for years. By providing multisensory cues and real-life settings for experiments, VR has become an actively researched and incredibly useful tool for visual studies, yet it is not without its shortcomings. Given the many challenges that we still face in stereo vision recovery research, should we first focus our energy on learning to walk before we run?

This article was specialist edited by Ebony Gunwhy and copy-edited by Claire Thomson.

References

1. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4901458/

2. https://en.wikipedia.org/wiki/Louis_%C3%89mile_Javal

3. https://en.wikipedia.org/wiki/The_Man_Who_Mistook_His_Wife_for_a_Hat

4. https://www.bbc.com/future/article/20120719-awoken-from-a-2d-world

5. https://www.npr.org/templates/story/story.php?storyId=99083752&t=1633015760606

6. https://www.nature.com/articles/s41598-017-03425-1/

7. https://www.oculus.com/?locale=en_GB

8. https://www.vive.com/uk/product/vive-pro2-full-kit/overview/

9. https://pubmed.ncbi.nlm.nih.gov/27269607/

10. https://www.seevividly.com/team

11. https://www.pnas.org/content/114/9/2183.short