Like a God from the Machine

On a flight back from my home country — the least religious nation in the Western world1 — I sat revising evolutionary psychology when suddenly I felt a voice whisper into my ear: “You don’t actually believe in this, do you?” I had never met a creationist before, and was dumbfounded by the salvo fired on me by my co-passenger. Soon enough he had me flustered like a cornered cat, and yet I felt my face contort itself into a self-righteous smirk as I thought: surely this was a man in denial, clinging to a delusion? He didn’t actually believe this, did he? Oh well — rolling my eyes, I turned a deaf ear towards him.

But now the mortifying symmetry suggested itself. What justification did I have for being this arrogant when my own atheism probably owed just as much to my upbringing and leaps of faith as my co-passenger’s Christianity? But how could two people — with identical experiences of being sure — endorse worldviews so diametrically at odds?2

This question belongs to cognitive science, and the answer would constitute something resembling a “deus ex machina”. When the ancient Greek playwrights found themselves incapable of ingeniously resolving their how-will-the-hero-survive plot conflict, theatre mechanics would lower down a “god from the machine” to rescue said hero. By employing a supernatural agent that sidesteps the narrative’s constraints, the denouement feels cheap and anti-climactic, but serves its purpose. Contemplating how disagreements arise from cognition might similarly resolve deadlocked political and religious debates by cutting the Gordian knot in acknowledgement that it may not be possible to untie it rationally.

Cognitively speaking, what is it like to think differently? Consider this snippet from a typical evolution versus creation debate:

Creationist: “The cause of intelligence must be intelligent.”

Atheist: “No. Natural phenomena must have natural causes, not supernatural ones.”

Creationist: “The idea of nothingness before the Big Bang is preposterous.”

Atheist: “Sure, but we are not intelligent enough to comprehend it.”

Admit it: the atheist’s defense could come off as after-the-fact face-saving theory modifications, just like a believer’s “But I feel it to be true!” frustrates atheists as a lazy cop-out. Atheists accept counter-intuitive ideas because scientific theories gain their validity not from subjective plausibility, but from the range, precision and success of the predictions that they generate. However, few people have direct exposure to research frontiers, so the acceptance occurs at face value. With politics, disagreement is even more understandable, as you can’t really run a controlled experiment on a nation to adjudicate which policy is better.

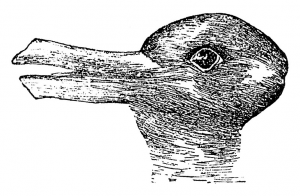

But what is the neurophysiological basis for conflicting logics? As seen in ambiguous images like the rabbit-duck, identical perceptual input can trigger divergent inferences. These inferences reflect how neural layers structure the flow of activity from network P to network Q (e.g. retinal image activating “it’s a duck”, or “raise taxes” activating “more money in the public sector”) so that, somehow, your brain’s connectivity determines what’s self-evidently true for you.

The rabbit-duck illusion illustrates how the brain can interpret the same evidence differently, from simple perceptual categorisation to complex political judgments. Credit: Wikimedia Commons

This leaves plenty of room for individual differences. Linguist George Lakoff (an outspoken liberal3) has argued that abstract reasoning occurs when relatively sparse brain networks borrow structure from denser ones, resulting in a transfer of logic from concrete to abstract domains — metaphors that unconsciously shape our inferences4.

Lakoff’s research indicates that partisans vary subtly in what metaphors they use to mentally represent “The Nation”. Republicans think of “The Nation” as an authoritative father who should be obeyed for stability’s sake, and who in turn wins competitions to support his family. Therefore, Lakoff argues, conservatives are concerned with the military, punishment (e.g. the death penalty), gender differentiation (e.g. opposing gay marriage), and cheating-detection (e.g. defining “fairness” in terms of proportionality to discourage “free-riders”).

Meanwhile, Democrats conceptualise “The Nation” as a nurturant, gender-less parent that empathically cares for the children and teaches them to take responsibility through restitution rather than punishment, and to define “fairness” in terms of equality (e.g. redistribution through taxes) rather than proportionality.

Few liberals are pro-parasitism and few conservatives are pro-inequality-for-equal-effort, yet these unconscious metaphors produce divergent inferences about optimal policy making. Which metaphor develops in an individual is speculated to involve dopamine-controlling genes. Genetics account for just above 50% of the variation in ideological predispositions5, and research shows conservatives to be more sensitive to disgust, threats and negative events — traits associated with the neurotransmitter dopamine — which could potentially explain conservatives’ preference for status quo and their traditionalist metaphors6.

Liberals, meanwhile, exhibit more grey matter in the anterior cingulate cortex (ACC), an area responsible for detecting conflicts and uncertainty. Researchers have interpreted this to mean that liberals may be more tolerant of, for example, tensions between rational judgments and emotional reactions, which liberal values often require (e.g. instinctively supporting the death penalty, but letting empirical evidence override this)7.

Paranormal beliefs are often explained as a tendency to perceive agency in random noise, for example, interpreting Jesus’ face on toasted bread as a sign from above8, where dopamine again appears to play a role. Evolution made us hyper-sensitive to patterns because false negatives (not seeing the enemy hiding in the bushes) are more costly than false positives (being unnecessarily startled by wind-ruffled shrubs), but excessive dopamine appears to increase the perceived signal, exacerbating this unconscious bias9. Religious people may therefore experience reality as more teeming with uncanny coincidences, and research demonstrating reduced ACC activity in zealots suggests that explanations that invoke super-intelligence help lower the anxiety triggered by these coincidences10.

Some people argue the Cone Nebula looks like Jesus Christ, an example of perceiving a religious signal in what is most likely random noise. Credit: Wikimedia Commons
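The asymmetry between false negatives and false positives described above can be made concrete with a little expected-cost arithmetic. The sketch below is only an illustration under stated assumptions: the function names and the cost figures (a missed predator being 99 times as costly as a needless startle) are hypothetical and do not come from the cited studies.

```python
def flee_threshold(cost_miss: float, cost_false_alarm: float) -> float:
    """Predator probability above which fleeing has the lower expected cost.

    Fleeing pays off when p * cost_miss > (1 - p) * cost_false_alarm,
    which rearranges to p > cost_false_alarm / (cost_false_alarm + cost_miss).
    """
    return cost_false_alarm / (cost_false_alarm + cost_miss)


def should_flee(p_predator: float, cost_miss: float, cost_false_alarm: float) -> bool:
    """Compare the expected cost of staying put with the expected cost of fleeing."""
    expected_cost_of_staying = p_predator * cost_miss
    expected_cost_of_fleeing = (1 - p_predator) * cost_false_alarm
    return expected_cost_of_staying > expected_cost_of_fleeing


# Hypothetical costs, chosen purely for illustration.
equal_costs = flee_threshold(cost_miss=1.0, cost_false_alarm=1.0)
unequal_costs = flee_threshold(cost_miss=99.0, cost_false_alarm=1.0)

print(f"Threshold when both errors cost the same: {equal_costs:.2f}")
print(f"Threshold when a miss costs 99 times more: {unequal_costs:.2f}")
print("Flee at a 5% chance of a predator?", should_flee(0.05, 99.0, 1.0))
```

Under these made-up numbers, an agent that minimises expected cost should react to rustling shrubs even when a predator is very unlikely, which is the sense in which a hair-trigger pattern detector can be adaptive despite producing many false alarms.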

Once dopamine-induced biases have produced political and religious intuitions, consciousness is motivated by the brain’s reward system to rationalise these beliefs. Here, consciousness is analogous to a lawyer rewarded for defending their client, irrespective of the client’s guilt, such that consciousness may end up defending non-veridical beliefs for the sake of reducing inconsistency with unconscious judgments11.

Indeed, adhering to prior beliefs despite contradictory evidence is pleasurable. When partisans lay inside a brain scanner while reading a statement by their favored candidate followed by another statement that threatened previous claims, the inconsistency between evidence and prior beliefs was found to activate areas associated with emotional distress. Mental acrobatics then appeared to suppress this conflict and tickle the reward circuits that give drug addicts their “fix”12.

The greater our commitment, the greater the pleasure13. Among the followers of a cult that prophesied an imminent apocalypse, those who were more invested – who had left their homes and jobs – were more likely to interpret the apocalypse’s failure to arrive as meaning that their faithfulness had saved them14. Thus, positive feedback causes subjective certainty to become the dominant factor in belief revision, making attitudes increasingly immune to evidence.

This makes one-sided environments like political parties and religious communities – which may isolate beliefs from reality-testing beyond a point of no return – similar in effect to delusional syndromes, where that feeling of knowing is weighted so disproportionately that it overpowers any counter-evidence. For example, when a doctor told a patient with Cotard’s syndrome (in which you feel dead) that her beating heart proved she was alive, the patient replied that because she was dead, a beating heart was no longer proof of being alive15.

Could cognitive self-awareness help people break free from their self-righteousness? Not much research addresses this question, but one study had subjects consider adult siblings having consensual, protected sex, alongside a meta-cognitive argument that the repugnance this triggered is an evolutionary adaptation meant to prevent siblings from conceiving unlikely-to-survive children, but that modern contraceptives have made this adaptation irrelevant16. After two minutes subjects rated the scenario as more morally acceptable compared with subjects given a non-cognitive argument (“The more love, the better”). If acknowledging cognitive biases helps people transcend them in moral reasoning, perhaps it generalises to political and religious commitments.

Deus ex machinas have a bad rep, but meta-level intervention may not imply poor closure as much as an irresolvability built into the conflict itself. Though two brains that process evidence differently may never concur, this does not mean we should dismissively ask our opponents to get their dopamine system sorted. Existing research presents no open-and-shut case, and pompous hand-waving to explain away opinions may come off as condescending. Furthermore, like placebos, non-veridical beliefs may carry legitimate benefits – hope, contentment, and cohesion – and without that hunch of “knowing” we may become excessively non-committal when, in politics and life alike, indecision can be just as dangerous as fanaticism.

Nevertheless, examining disagreement in terms of cognition may allow us to constructively empathise with our opponents without espousing or excusing their views, thus disciplining us to avoid becoming close-minded hypocrites. As our plane touched down on Glaswegian ground, I didn’t think there was hope for me and my co-passenger to ever share a worldview. For a while I fantasised about what narrative my co-passenger’s belief system had created to explain away my atheism, but eventually returned to my evolution notes: for me, the idea that his god emerged from his brain’s machinery was a denouement good enough.

This article was specialist edited by Yulia Revina and copy edited by Manda Rasa Tamosauskaite

References

- Sweden, according to Gallup’s 2014 End of Year Survey http://www.thelocal.se/20150413/swedes-least-religious-in-western-world

- Intriguingly, intelligence seems near-irrelevant to a person’s ability for balanced judgment. Stanovich, K., & West, R. (2008). On the failure of cognitive ability to predict myside and one-sided thinking biases. Thinking and Reasoning, 14(2), 129-167. http://keithstanovich.com/Site/Research_on_Reasoning_files/SWTandR08.pdf

- Some have argued that the over-representation of liberals among social psychologists may have biased research to depict conservatives in a less flattering light. http://www.newyorker.com/science/maria-konnikova/social-psychology-biased-republicans

- Lakoff’s work is described in his books “Moral Politics: How Liberals and Conservatives Think” (University of Chicago Press, 1996) and “The Political Mind: A Cognitive Scientist’s Guide to Your Brain and Its Politics” (Penguin Books, 2009).

- The famous Minnesota twin study estimates that self-identified ideology has a heritability of 0.56, meaning that 56% of the population-level variation is due to genetic variation. Funk, C.L., Smith, K.B., Alford, J.R., Hibbing, M.V., Eaton, N.R., Krueger, R.F., Eaves, L.J., & Hibbing, J.R. (2012). Genetic and Environmental Transmission of Political Orientations. Political Psychology, 34(6), 805-819. http://onlinelibrary.wiley.com/doi/10.1111/j.1467-9221.2012.00915.x/abstract

- This research is reviewed in John R. Hibbing’s book “Predisposed: Liberals, Conservatives, and the Biology of Political Differences” (Routledge: 2013), and this journal article: Hibbing, J.R., Smith, K.B., & Alford, J.R. (2014). Differences in negativity bias underlie variations in political ideology. Behavioral and Brain Sciences, 37(1), 297-350. http://www.psych.nyu.edu/vanbavel/lab/documents/Jost.etal.2014.BBS.pdf

- Kanai, R., Feilden, T., Firth, C. & Rees, G. (2011). Political Orientations Are Correlated with Brain Structure in Young Adults. Current Biology, 21(8), 677-680. http://www.sciencedirect.com/science/article/pii/S0960982211002892

- Evidence that religious people are more prone to error in probability judgment tasks comes from e.g. the following study on the “conjunction fallacy”. Rogers, P., Davis, T., & Fisk, J. (2009). Paranormal Belief and Susceptibility to the Conjunction Fallacy. Applied Cognitive Psychology, 23(4), 524-542.

- The role of dopamine in religiosity is discussed in: Krummenacher, P., Mohr, C., Haker, H., & Brugger, P. (2010). Dopamine, Paranormal Belief, and the Detection of Meaningful Stimuli. Journal of Cognitive Neuroscience, 22(8), 1670-1681. Evidence of the anxiety-reducing function of religion comes from the fact that people induced to feel lonely are more likely to frame non-human things as agents (to “anthropomorphise”). Epley, N., Akalis, S., Waytz, A. & Cacioppo, J.T. (2008). Creating Social Connection Through Inferential Reproduction: Loneliness and Perceived Agency in Gadgets, Gods, and Greyhounds. Psychological Science, 19(2), 114-120. http://pss.sagepub.com/content/19/2/114.short

- Beware that this association is difficult to interpret. It may be that ACC firing is what predisposes people to religious belief. Evidence of the role of the anterior cingulate cortex in religion comes from: Inzlicht, M., McGregor, I., Hirsh, J.B., & Nash, K. (2009). Neural Markers of Religious Conviction. Psychological Science, 20(3), 385-392. http://www.yorku.ca/ianmc/readings/Inzlicht,%20McGregor,%20Hirsh,%20&%20Nash,%202009.pdf

- In the psychological literature, this theory is known as “intuitionism”. One prominent scholar here is Jonathan Haidt, who has written an excellent book on the psychology of self-righteousness: “The Righteous Mind: Why Good People are Divided by Politics and Religion” (Penguin: 2013).

- This research is discussed in Drew Westen’s book “The Political Brain: The Role of Emotion in Deciding The Fate of The Nation” (Public Affairs: 2007) and the particular experiment is found in this article: Westen, D., Blagov, P.S., Harenski, K., Kilts, C. & Hamann, S. (2006). Neural Bases of Motivated Reasoning: An fMRI Study of Emotional Constraints on Partisan Political Judgment in the 2004 U.S. Presidential Election. Journal of Cognitive Neuroscience, 18(11), 1947-1958. http://web.b.ebscohost.com.ezproxy.lib.gla.ac.uk/ehost/detail/detail?sid=b2d50efb-a21d-4c36-91f2-c92f4c08003e%40sessionmgr102&vid=0&hid=118&bdata=JnNpdGU9ZWhvc3QtbGl2ZQ%3d%3d#AN=22955282&db=pbh

- The finding that zeal correlates with neural reward is found in: Gozzi, M., Zamboni, G., Krueger, F., & Grafman, J. (2010). Interest in politics modulates neural activity in the amygdala and ventral striatum. Human Brain Mapping, 31(11), 1763-1771. http://www.ncbi.nlm.nih.gov/pubmed/20162603

- This cult was studied by the psychologist behind the “cognitive dissonance” theory, Leon Festinger, and is described in his book “When Prophecy Fails” (University of Minnesota Press: 1956).

- This research is discussed in the book “On Being Certain: Believing You Are Right Even When You’re Not” by Robert A. Burton (St. Martin’s Press: 2008).

- Paxton, J.M., Ungar, L. & Greene, J.D. (2012). Reflection and Reasoning in Moral Judgment. Cognitive Science, 36(1), 163-177. http://onlinelibrary.wiley.com.ezproxy.lib.gla.ac.uk/doi/10.1111/j.1551-6709.2011.01210.x/full