Should We Be Worried About Artificial Intelligence?

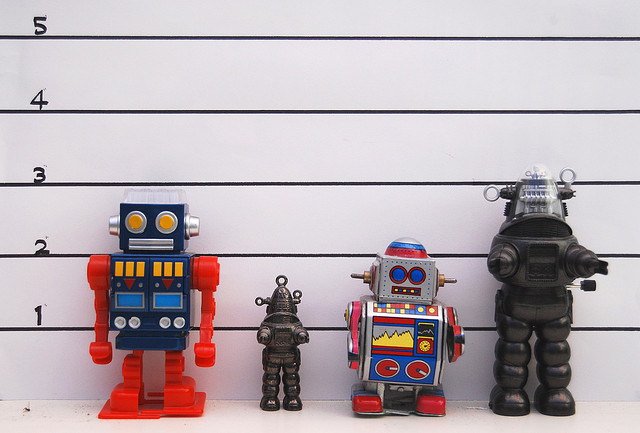

The image of a dystopian future in which artificially intelligent machines turn against the humans who created them is not new; it is a theme that has captivated science fiction writers for decades. From Ridley Scott’s ‘Alien’ in the 1970s to Channel 4’s latest TV drama ‘Humans’, screenwriters have capitalised on our fear of, and fascination with, artificial intelligence (AI). But as technology advances ever faster, the idea of AI making the leap from fiction to reality no longer seems so far-fetched. Is a robot uprising really likely in our lifetimes? And even if it isn’t, should we still be concerned about the development of artificial intelligence?

The Global Challenges Foundation certainly seems to think so. In a recent report, it listed artificial intelligence alongside nuclear war, global pandemics and major asteroid impacts as risks that threaten human civilisation [1]. Its concern is shared by Microsoft co-founder Bill Gates, who said in a Reddit ‘Ask Me Anything’ interview that although AI will initially be “positive if we manage it well”, the machines’ intelligence would eventually become “strong enough to be a concern”. Tesla CEO Elon Musk, who called for regulation of AI in 2014, has voiced similar worries, as has Professor Stephen Hawking, who believes that “the development of full artificial intelligence could spell the end of the human race” [2].

It seems, then, that we would be right to worry. However, according to Murray Shanahan, Professor of Cognitive Robotics at Imperial College London, designing machines that think like humans is actually “incredibly hard”. At the moment, AI can complete dedicated tasks using complex algorithms: Apple’s Siri, for example, can search the internet and play music by following a user’s voice commands. Genuine human-level AI, with emotional intelligence and common sense, is far more complex. Shanahan believes that anything close to this kind of technology is a long way off, saying that even in the second half of this century its development is “increasingly likely but still not certain” [3].

So, is AI a threat to us? In the short term, at least while we remain in control of it, AI could make our lives much easier. Fortunately (or unfortunately), it appears unlikely that we will see superintelligent AI in our lifetimes, but perhaps we needn’t be too worried for our descendants after all. Apple co-founder Steve Wozniak believes that superintelligent robots would seek to preserve the human race rather than destroy it, pampering us and keeping us as pets [4]. That sounds like the kind of future many people could get on board with.

Edited by Debbie Nicol

References

1. Armstrong, S. and Pamlin, D. (2015). 12 Risks That Threaten Human Civilisation. Global Challenges Foundation.

2. Dredge, S. (2015, 29 Jan). “Artificial intelligence will become strong enough to be a concern, says Bill Gates.” The Guardian. Retrieved from http://www.theguardian.com/technology/2015/jan/29/artificial-intelligence-strong-concern-bill-gates

3. Sample, I. (2015, 26 Jun). “AI: will the machines ever rise up?” The Guardian. Retrieved from http://www.theguardian.com/science/2015/jun/26/ai-will-the-machines-ever-rise-up

4. Smith, P. (2015, 23 Mar). “Apple co-founder Steve Wozniak on the Apple Watch, electric cars and the surpassing of humanity.” The Australian Financial Review. Retrieved from http://www.afr.com/technology/apple-cofounder-steve-wozniak-on-the-apple-watch-electric-cars-and-the-surpassing-of-humanity-20150323-1m3xxk