AI in Engineering: Supplementing the role of the engineer

Beyond the hype and perceived threat of artificial intelligence (AI), there are many useful applications for intelligent methods. These range from the very large language models powering search engines and automated writing to simple regression models that find trends in data. No field is immune to the current expansion of AI, including engineering. While some engineers meet it with scepticism and distrust, AI has the potential to greatly assist them in their work and help solve very challenging problems. Here I will make the case for why AI can be valuable in engineering if properly understood, while considering what can go wrong if we employ AI blindly.

Discussion of AI invariably conjures up images of super-intelligent robots and technological apocalypses, likely due to its portrayal in Hollywood. While this is quite far from the current reality of AI, it is interesting to note that most people are aware of AI yet cannot explain exactly what it is. Even in the field, AI is a very fuzzy concept that lacks a single formal definition. A simple way of thinking of AI is as a collection of methods that take inspiration from human intelligence to improve solutions to certain problems. Some of the most common architectures that fall under this broad category are neural networks, originally designed to replicate processes found in brains; evolutionary algorithms, which mimic biological evolution; and knowledge-based systems, which store and use data to derive patterns that can be matched to new data. Many other methods are classified as AI, but there is not space here to discuss them all.

Engineering uses a variety of tools from maths and the sciences to solve real-world problems. AI is one such tool, applicable across a wide variety of engineering domains. A few examples are as follows:

· Optimisation – maximising or minimising a characteristic of a system so it operates at peak performance. This could be minimising energy use for heating a house or maximising the output of a manufacturing process.

· Autonomy – where we design a machine to operate on its own without human supervision. One relevant example is planetary rovers, which a technician cannot be sent to fix.

· Uncertainty – similar to autonomy, dealing with uncertainty requires machines to display some level of what could be called intelligence. For example, any machine that interacts with humans, such as a self-driving car, needs to take the uncertainty of human behaviour into account.

· Knowledge extraction – retrieving useful information from vast corpora of data can be sped up using computers. This can accelerate research and development by quickly finding relevant literature for a given project.

· Complexity – when problems become too complex for traditional solutions to work, AI can be used instead. Control of large systems, such as national grids with intermittent energy sources and varying demand, is one example of a complex problem that benefits from AI.
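To make the optimisation item above concrete, here is a minimal sketch of an evolutionary algorithm, one of the method families mentioned earlier, applied to the house-heating example. The cost function, its coefficients, the temperature bounds and all function names are invented for illustration; a real heating model would be far more detailed.

```python
import random

def daily_cost(setpoint_c, outdoor_c=5.0, comfort_c=21.0):
    """Hypothetical cost of a thermostat setpoint (all coefficients invented).

    Energy use grows with the indoor-outdoor temperature gap; discomfort
    grows with the squared distance from the preferred temperature.
    """
    energy = 0.8 * max(setpoint_c - outdoor_c, 0.0)
    discomfort = 0.5 * (setpoint_c - comfort_c) ** 2
    return energy + discomfort

def evolve_minimum(cost, low, high, generations=200, offspring=10, seed=0):
    """Simple elitist evolutionary search for the input that minimises `cost`."""
    rng = random.Random(seed)      # fixed seed so the run is repeatable
    parent = low                   # deliberately poor starting guess
    step = (high - low) / 10       # mutation size, shrunk each generation
    for _ in range(generations):
        # Mutation: perturb the parent, keeping children inside the bounds.
        children = [min(high, max(low, parent + rng.gauss(0.0, step)))
                    for _ in range(offspring)]
        # Selection: the parent survives unless a child is strictly better.
        best_child = min(children, key=cost)
        if cost(best_child) < cost(parent):
            parent = best_child
        step *= 0.97
    return parent

best_setpoint = evolve_minimum(daily_cost, low=15.0, high=25.0)
print(f"Best setpoint found: {best_setpoint:.2f} deg C")
```

For this made-up cost, setting the derivative to zero gives a minimum at 20.2 °C, so the search should settle close to that value. The point of the sketch is that the algorithm only ever evaluates the cost function, which is why such methods remain usable when no tidy analytic solution exists.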

Clearly, the uses of AI for engineering problems are numerous and beneficial. But is there motivation from engineers to use these tools, and will people embrace them?

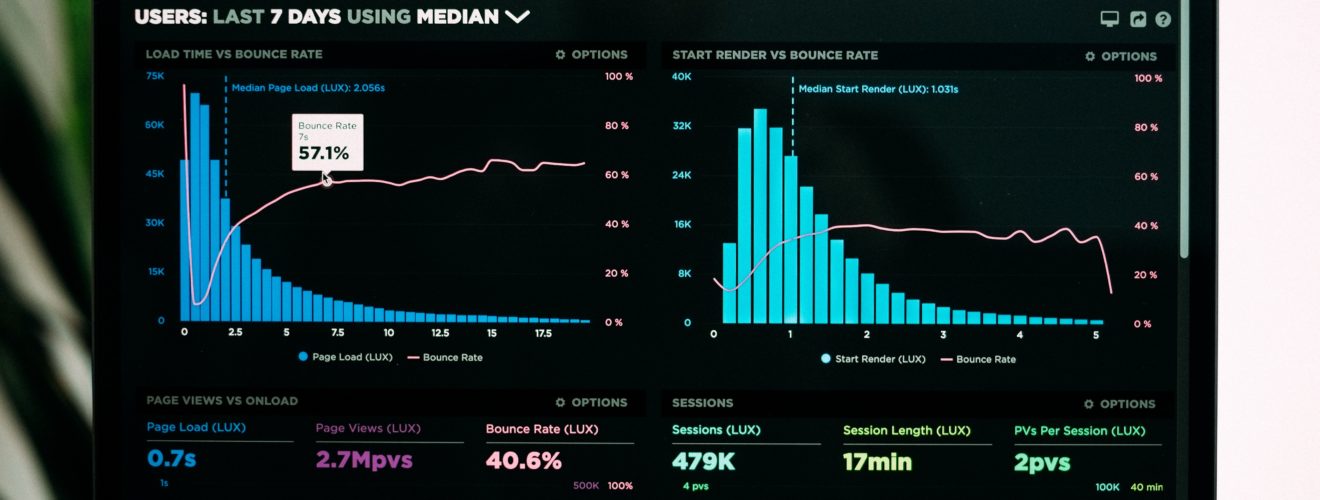

To gauge how AI is viewed by engineers, I searched for AI as a keyword in IMechE news articles [1].

Most headlines were positive about its potential, like: “How artificial intelligence can help the hunt for new materials”, “AI drones to speed up search and rescue missions”, and “US researchers use AI to identify alternatives to liquid batteries”. These also highlight the range of applications of AI within engineering. However, skills gaps have been identified in engineers regarding these new technologies, as discussed in Dr Jenifer Baxter’s response to a Select Committee report on artificial intelligence. She says:

“The report highlights that as AI decreases demand for some jobs, but creates demand for others, retraining will become a lifelong necessity. I would suggest that it will become more important for the next generation to understand how AI can be used to enhance their work, rather than fear the effects on their roles and whether their jobs will be replaced.” [2]

Looking particularly at where she notes “it will become more important for the next generation to understand how AI can be used”, this would suggest engineers currently lack this understanding. This could pose a problem given the already widespread use of AI.

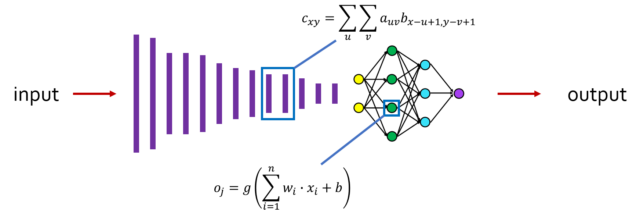

Many AI practitioners treat it as a so-called “black box”. This is a term given to a system where data go in and results come out, but whose inner workings are hidden from the user. One of the most widely used classes of methods, called deep learning, is very powerful but lacks what is called “explainability”. This means that, although such methods may perform well on a given problem, their decision-making process is non-transparent and cannot easily be interrogated or understood by humans. There is currently a lot of research interest in explaining how deep learning systems work, but this is not straightforward. As an example, consider the convolutional neural network (CNN) shown below: its structure has several “layers” which create increasingly abstract representations of the input data. Each layer is a mathematical transformation of the previous layer’s output, designed to extract useful features from the data. The latter part of this CNN example is itself a neural network, which in effect uses the feature representation from the previous layers to generate a decision output, again via a series of mathematical transformations. Such networks can accurately classify images or predict values, but cannot be easily interpreted by humans except as complex mathematical expressions.

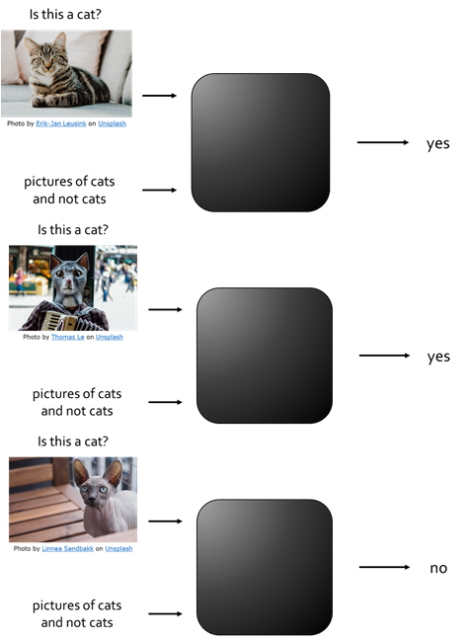
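The idea that each layer is just a mathematical transformation of the previous one can be sketched in a few lines. This is an illustrative toy rather than the network in the figure: the 4×4 image, the edge-detecting kernel, and the weights and bias of the final decision layer are all hand-picked assumptions, whereas a real CNN would learn them from data.

```python
import math

def conv2d(image, kernel):
    """Slide the kernel over the image (no padding) and sum the products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [sum(image[i + di][j + dj] * kernel[di][dj]
             for di in range(kh) for dj in range(kw))
         for j in range(out_w)]
        for i in range(out_h)
    ]

def relu(feature_map):
    """Non-linearity: keep positive responses, zero out the rest."""
    return [[max(0.0, v) for v in row] for row in feature_map]

def dense_sigmoid(features, weights, bias):
    """Decision layer: weighted sum of features squashed into (0, 1)."""
    z = sum(f * w for f, w in zip(features, weights)) + bias
    return 1.0 / (1.0 + math.exp(-z))

# Toy input: dark left half, bright right half.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hand-picked kernel that responds to a dark-to-bright vertical edge.
edge_kernel = [[-1, 1],
               [-1, 1]]

feature_map = relu(conv2d(image, edge_kernel))         # feature extraction
features = [v for row in feature_map for v in row]     # flatten to a vector
score = dense_sigmoid(features, weights=[0.5] * 9, bias=-1.5)
print(feature_map)
print(f"Score for 'contains a vertical edge': {score:.2f}")
```

Even in this tiny case, the output is only a number produced by nested sums and products. With millions of learned weights instead of nine hand-picked ones, tracing why a particular score came out becomes the explainability problem described above.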

Why is this a problem? After all, many of the most advanced AI systems today are treated as black boxes, but they still “work”. We simply feed the black box multitudes of data for a given problem and it outputs solutions. To illustrate the problem with this, I will use a common toy example of training a black box AI to classify whether or not an image is of a cat. We train a black box model on pictures labelled “cat” or “not cat”, then show it a new picture. In the case shown below, it correctly recognises this as a cat. Depending on the dataset and how it has been trained, we might well be able to fool this black box model. As shown below, we could present an image of a person in a cat mask, which it incorrectly identifies as a cat, or a sphynx cat, which it asserts is not a cat. We can chuckle, in this case, at the computer’s error and try retraining it. But what if the stakes were higher?

A Reuters report from 2018 [3] discusses Amazon’s attempt to build an AI-driven automated recruiting tool. Screening job applications is time-consuming, so you can see why a large company like Amazon would try to automate this task. Their algorithm would take in applicants’ CVs and rate them, suggesting the best CVs to go forward. The problem lies in the fact that the AI was trained on 10 years of candidate CVs, which were dominated by men. This led it to downgrade CVs containing the word “women’s”, as in a women’s sports team, as well as applications from graduates of all-female colleges. Although the developers edited the algorithm so that those terms would have no effect, its black box nature means it could still find other ways to discriminate against women. As noted later in the article, similar algorithms could favour words which appear more often on male CVs. Such are the issues with using black box AI.
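The mechanism can be reproduced in miniature. The sketch below is not Amazon’s system, whose details are not public: it is a deliberately simple word-scoring model trained on six invented CV records, with “netball” chosen as a hypothetical word that happens to co-occur with “women’s” in the toy data.

```python
import math
from collections import Counter

# Synthetic, invented hiring history: (words on the CV, was the person hired?)
history = [
    ({"python", "chess"}, True),
    ({"python", "rugby"}, True),
    ({"java", "chess"}, True),
    ({"java", "rugby"}, True),
    ({"python", "women's", "netball"}, False),
    ({"java", "women's", "netball"}, False),
]

def learn_word_weights(records):
    """Weight each word by the log-odds of 'hired', with add-one smoothing."""
    hired, rejected = Counter(), Counter()
    for words, was_hired in records:
        (hired if was_hired else rejected).update(words)
    vocabulary = set(hired) | set(rejected)
    return {w: math.log((hired[w] + 1) / (rejected[w] + 1)) for w in vocabulary}

def score(cv_words, weights):
    """A CV's score is the sum of the weights of the words it contains."""
    return sum(weights.get(w, 0.0) for w in cv_words)

weights = learn_word_weights(history)
print("weight for \"women's\":", round(weights["women's"], 2))

# The 'fix': force the offending word to have no effect.
patched = dict(weights)
patched["women's"] = 0.0

# Two CVs with the same technical skill, differing only in a hobby.
cv_a = {"python", "chess"}
cv_b = {"python", "netball"}
print("after patch:", round(score(cv_a, patched), 2),
      "vs", round(score(cv_b, patched), 2))
```

The model never sees anyone’s gender, yet it learns a negative weight for “women’s” simply because that word appears only on rejected CVs in the history. Zeroing that weight does not remove the bias: the correlated word still drags the second CV’s score down, which is the proxy effect the report warns about.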

So what is the better alternative? For problems that are suited to AI approaches, it is tempting to throw the black box at them and see the amazing results that emerge. However, as engineers, we are clearly better served by understanding the problem well before using tools to solve it. Such is the case with, for example, finite element analysis, which uses computer models of mechanical parts and components to simulate how they respond to forces. In these analyses, an important first step is evaluating the problem, locating likely areas of maximum stress, or possibly performing hand calculations to validate simulations. This is where AI overlaps with the broader (and older) field of data science. When faced with a new dataset, we can gain more insight into the problem through analysis of the data and better pre- and post-processing. Various statistical methods exist which give insight into causal relationships or the more relevant features present in the data. This is useful because we improve our own understanding of the problem while also creating a model which achieves what we set out to do. Furthermore, as in the recruitment example above, algorithms inherit bias from the data on which they are trained, which raises many ethical issues. Whatever the application, we must strive to make AI equitable by ensuring training data is representative, carrying out independent audits of algorithms, and establishing standard ethical guidelines for AI practitioners, especially engineers, to follow.
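Two of these checks, representativeness of the training data and an audit of an algorithm’s decisions, can start from very simple counting. The sketch below is one possible starting point under stated assumptions: the group labels “A” and “B”, the records, and the function names are all invented, and what counts as an acceptable imbalance is a policy decision rather than something the code can settle.

```python
from collections import Counter

def group_shares(group_labels):
    """Fraction of the dataset belonging to each group."""
    counts = Counter(group_labels)
    total = sum(counts.values())
    return {group: n / total for group, n in counts.items()}

def selection_rates(decisions):
    """Fraction selected within each group.

    `decisions` is an iterable of (group, was_selected) pairs.
    """
    totals, selected = Counter(), Counter()
    for group, was_selected in decisions:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {group: selected[group] / totals[group] for group in totals}

def rate_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0

# Synthetic audit data: 10 candidates from each of two hypothetical groups.
decisions = ([("A", True)] * 6 + [("A", False)] * 4 +
             [("B", True)] * 3 + [("B", False)] * 7)

shares = group_shares(group for group, _ in decisions)
rates = selection_rates(decisions)
print("shares:", shares)
print("selection rates:", rates)
print("rate ratio:", rate_ratio(rates))
```

Here both groups are equally represented, yet group B is selected half as often as group A. A gap like this does not prove discrimination on its own, but it tells an auditor exactly where to look more closely.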

In summary, it is clear that AI consists of various tools which are incredibly useful to engineers. These tools will be used in the future to solve some of the toughest challenges we currently face, like climate change. Unfortunately, we cannot solve climate change by handing the problem and a lot of data to a black box.

However, we can use AI to improve climate models to understand how the planet changes; accelerate societal adaptation to future necessary lifestyle changes; and reduce emissions from electricity by optimising power grids. This will only be possible if we have engineers who properly understand how to use AI.

This article was specialist edited by Natasha Padfield and copy-edited by Deep Bandivadekar.

References

1. https://www.imeche.org/search?query=artificial%20intelligence

2. https://www.imeche.org/news/news-article/institution’s-response-to-the-select-committee-on-artificial-intelligence-(house-of-lords)-report

3. https://www.reuters.com/article/us-amazon-com-jobs-automation-insight/amazon-scraps-secret-ai-recruiting-tool-that-showed-bias-against-women-idUSKCN1MK08G