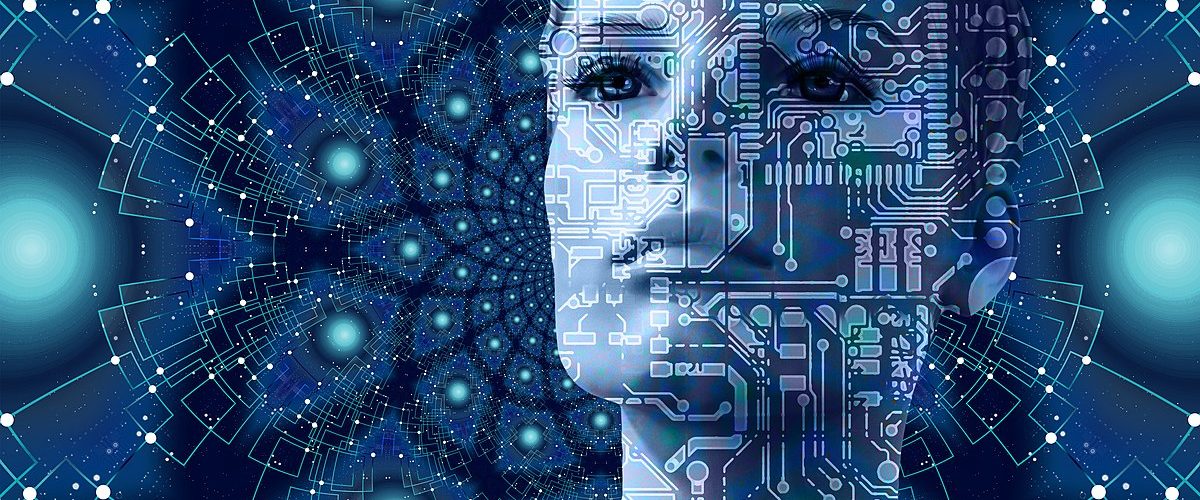

How can a computer create art?

There are multiple methods one can use to generate an image with artificial intelligence (AI), but here we will cover perhaps the simplest method – by using a Variational Autoencoder (VAE) – and explain how it can generate images of faces of people who do not exist.

An autoencoder is a simple neural network with two parts, an encoder and a decoder [1]. The encoder is given an image, which it reduces to a small set of numbers known as the latent space representation. The decoder then translates this latent space representation back into an image. The encoder and decoder are trained together on many images, with the aim of minimising the difference between the encoder input and the decoder output, i.e. accurately recreating the input images.
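To make the training idea concrete, here is a minimal sketch in Python with NumPy. It is only an illustration under strong assumptions: the "images" are made-up vectors of 8 numbers, the encoder and decoder are each a single linear layer, and names such as `W_enc`, `W_dec` and `reconstruction_error` are invented for this example. Real autoencoders for faces use far larger, non-linear networks.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy stand-in for images: 200 "images" of 8 pixels each, which really
# only vary along 2 hidden directions (their shared structure).
hidden = rng.normal(size=(200, 2))
mixing = rng.normal(size=(2, 8))
images = hidden @ mixing

# Assumption: encoder and decoder are single linear layers.
W_enc = rng.normal(scale=0.1, size=(8, 2))  # 8 pixels -> 2 latent numbers
W_dec = rng.normal(scale=0.1, size=(2, 8))  # 2 latent numbers -> 8 pixels

def reconstruction_error(x):
    """Mean squared difference between encoder input and decoder output."""
    latent = x @ W_enc
    return np.mean((latent @ W_dec - x) ** 2)

initial_error = reconstruction_error(images)

learning_rate = 0.01
for step in range(2000):
    latent = images @ W_enc    # encoder: image -> latent representation
    output = latent @ W_dec    # decoder: latent representation -> image
    diff = output - images
    # Gradients of the squared error (up to a constant factor) with
    # respect to the decoder and encoder weights.
    grad_dec = latent.T @ diff / len(images)
    grad_enc = images.T @ (diff @ W_dec.T) / len(images)
    # Both parts are updated together, as described above.
    W_dec -= learning_rate * grad_dec
    W_enc -= learning_rate * grad_enc

final_error = reconstruction_error(images)
print(f"error before training: {initial_error:.4f}")
print(f"error after training:  {final_error:.4f}")
```

Each 8-number "image" is squeezed into just 2 latent numbers and then rebuilt, and training lowers the reconstruction error because the toy data only ever varied in 2 ways to begin with.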

Human faces are, by and large, very similar. They usually have two eyes, a nose, a mouth, etc. Each of these features can vary in shape, size, and skin tone of course, but the commonalities among human faces mean that some information in the image of a face can be compressed. Training an autoencoder on images of faces results in the encoder learning to produce latent space representations which contain information on the differences between all of the images it was trained on, while the decoder stores information on the commonalities.

If a decoder takes just a set of numbers (the latent space representation) and uses them to generate an image of a face, does this mean we can generate new faces by simply varying these numbers? As it turns out, this is exactly how we do it. However, traditional autoencoders didn’t do a very good job of this. Small changes in the chosen numbers led to significant differences in the decoder output so that the images produced no longer looked much like human faces at all [2]. You needed to use very similar latent space values to those which the decoder was trained on in order to get anything sensible, but then you just reproduced the training images.

This is where the VAE comes in. Instead of the encoder just outputting the latent space representation of an image, small random numbers are added to the latent space values every time an image is processed. This randomness forces the decoder to generalise, so that slightly different latent space values still produce images close to the training images [1]. Because the decoder has learned to deal with this randomness, it can be given a randomly generated latent space representation which it has never seen before and still create an image which looks like a human face, but of a person who does not exist.
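The noise step and the generation step can be sketched in a few lines of Python with NumPy. This is a hedged illustration, not a working face generator: it assumes the common VAE formulation in which the encoder outputs a mean and a log-variance for each latent value (a detail not spelled out above), and `add_latent_noise`, `toy_decoder` and all of the numbers are made up for the example. The decoder here is untrained, so its output is meaningless as an image.

```python
import numpy as np

rng = np.random.default_rng(0)

def add_latent_noise(latent_mean, latent_log_var, rng):
    """Return the latent values with fresh random noise added.

    Assumption: the encoder supplies a mean and a log-variance per
    latent value, and the noise is scaled by the standard deviation.
    """
    noise = rng.standard_normal(latent_mean.shape)
    return latent_mean + np.exp(0.5 * latent_log_var) * noise

# Hypothetical encoder output for one image: 4 latent values.
latent_mean = np.array([0.3, -1.2, 0.8, 0.1])
latent_log_var = np.full(4, -2.0)  # standard deviation exp(-1), about 0.37

# The same image gives slightly different latent values on every pass,
# so during training the decoder must cope with a neighbourhood of
# values rather than a single point.
z1 = add_latent_noise(latent_mean, latent_log_var, rng)
z2 = add_latent_noise(latent_mean, latent_log_var, rng)

# Stand-in decoder (untrained, random weights): 4 latent numbers -> 8x8 pixels.
decoder_weights = rng.normal(size=(4, 64))

def toy_decoder(latent):
    pixels = 1.0 / (1.0 + np.exp(-(latent @ decoder_weights)))  # values in 0..1
    return pixels.reshape(8, 8)

# Generation: draw latent values the encoder never produced and decode them.
new_latent = rng.standard_normal(4)
new_image = toy_decoder(new_latent)
print(z1, z2, new_image.shape)
```

With a trained decoder in place of `toy_decoder`, the last two lines are the whole generation procedure: pick random latent values, decode them, and look at the resulting image.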

[1] medium.com/@judyyes10/generate-images-using-variational-autoencoder-vae

[2] www.scaleway.com/en/blog/vae-getting-more-out-of-your-autoencoder/

Edited by Hazel Imrie

Copy-edited by Molly Donald